8. DEFEND AGAINST CYBER THREATS | Computer Security

Security in the physical realm is an extraordinarily hard problem, but an inescapable one. Our civilization now also rests on software infrastructure. Security in the digital realm is now also an inescapable problem. But the current software infrastructure is not just insecure, it is insecurable. Digital security is also extraordinarily hard, but it is differently hard. Reasoning by analogy with physical security is fraught with peril. Let’s begin by explaining how these realms differ, and how to approach computer security.

Security in Digital vs. Physical Realms

Security in the digital realm differs in fundamental ways from security in the physical realm. But all security starts with defensible boundaries.

Physics does not let us build impenetrable walls. We can build stronger and stronger walls, but no matter how strong the wall, there is always a yet stronger force that can break through it. In the digital realm, perfect boundaries are cheap and plentiful. All modern CPUs support address space separation and containment of user-mode processes. Many programming languages support memory safety and object encapsulation. Modern cryptographic algorithms give us separation that seems close enough to perfect. The software built on these boundaries—operating systems, application software, cryptographic protocols—thus could be built securely.

In the physical realm, an attack is costly for the attacker. There is a marginal cost per victim, if nothing else, of the attacker’s attention. A good defense raises the marginal cost of attack. By contrast, software attacks typically have zero marginal cost per victim. Once malware works, its damage can be multiplied by billions using only the victim’s resources. Any vulnerable software system exposed to the outside world will eventually be attacked. We must build invulnerable systems when we can, and otherwise minimize the damage from a successful attack.

With perfect boundaries to build on, why is so much software so hopelessly insecure? The richness of modern software comes from composing specialized software building blocks written by others. These building blocks embody specialized domain knowledge. We compose them so they cooperate and bring more knowledge to bear on the task at hand. Boundaries alone only prevent involuntary interference. To enable cooperation, we poke holes in the boundaries. Without the right architecture, this hole poking makes messes with vulnerabilities no one understands, until it is too late.

In the physical realm, semi-permeable boundaries enable interaction across protective barriers. Canvas blocks light and wind but allows sound. Glass allows light, blocks wind, and attenuates sound. Cell membranes block some chemicals but allow others. In computer security, this is the subject of access control, which we look at below in Nested Boundaries and Channels.

The Fatal Risk Threshold is Behind Us

Advances in machine learning have increased awareness that we will eventually build artificial superintelligences. For some, this has become the risk they worry about most. On this developmental pathway, at some point we cross the AI Threshold of having adequate capacity to destroy human civilization. Toby Ord lays out a specific understandable pathway for an AGI takeover:

“First, the AI system could gain access to the internet and hide thousands of backup copies, scattered among insecure computer systems around the world, ready to wake up and continue the job if the original is removed. Even by this point, the AI would be practically impossible to destroy: consider the political obstacles to erasing all hard drives in the world where it may have backups. It could then take over millions of unsecured systems on the internet, forming a large “botnet.” This would be a vast scaling-up of computational resources and provide a platform for escalating power. From there, it could gain financial resources (hacking the bank accounts on those computers) and human resources (using blackmail or propaganda against susceptible people or just paying them with its stolen money). It would then be as powerful as a well-resourced criminal underworld, but much harder to eliminate.”1

None of these steps requires any mysterious power; criminals with human-level intelligence can already carry them out today using the internet. Our current systems are so vulnerable that they don’t need a superintelligence to destroy them. Why is our world still standing?

One reason is the economics of attack. We have seen many individual systems destroyed, but not civilization as a whole. A coordinated pervasive attack would involve attacking many different systems, exploiting many different vulnerabilities. Currently, this is bottlenecked on the attention of human attackers who discover vulnerabilities and write malware to exploit them. However, static analysis and machine learning are already advanced enough to remove this bottleneck. In the 2016 DARPA Cyber Grand Challenge, a competition designed to show the state of the art in vulnerability detection and exploitation, a winning system built malware that autonomously discovered unknown vulnerabilities and successfully exploited them.2 The AI Threshold for a coordinated attack, not bottlenecked on human attention, is already behind us.

In any case, major nation-states, especially including the United States, are not subject to these economic limitations. They have been stockpiling software vulnerabilities and exploiting them quite effectively. However, in peacetime, they mostly use this capacity to spy—to illicitly gather information—rather than to cause visible damage. But their accumulated capacity to cause damage, say in a major cyberwar, is already a threat to civilization. Today, those who could use these technologies to destroy civilization do not want to. But anything experts can use software for today can be easily copied and further automated. We should expect script kiddies to be able to do these things tomorrow. Our insecurable infrastructure may not be survivable much longer. A world safe from AI dangers must first already be safe against cyberwar.

Cyberwar

The U.S. ability to do damage is already so great that further advances in attack abilities provide negligible benefit.3 At the same time, U.S. society is highly dependent on computer systems and thus more vulnerable than many potential adversaries. Our efforts should be redirected from attack to defense. The U.S. electric grid is vulnerable, with damage estimates by Lloyd’s ranging up to $1 trillion.4 Cyber attacks can cause both physical and software damage to the electric grid, and that damage would take months (arguably, years) to repair, leaving entire states without power. Lloyd’s, as an insurance company, estimated financial damages rather than fatalities. But a disaster that reduces civilization’s overall carrying capacity would cause massive starvation.5

We don’t have to look far to get a first understanding of the gravity of a potential attack: In the 2020 attack on SolarWinds, hackers allegedly affiliated with Russia’s SVR, their CIA equivalent, placed corrupted software into the foundational network infrastructure of 30,000 different companies, including many Fortune 500 companies and critical parts of the government. These included the Departments of Homeland Security, Treasury, Commerce and State, posing a “grave risk” to federal, state and local governments, as well as critical infrastructure and the private sector.6 The Energy Department and its National Nuclear Security Administration, which maintains America’s nuclear stockpile, were amongst the compromised targets.

In 2021, the National Security Agency (NSA), the Cybersecurity and Infrastructure Security Agency (CISA), and the Federal Bureau of Investigation (FBI) found Chinese state-sponsored actors aggressively targeting “U.S. and allied political, economic, military, educational, and critical infrastructure personnel and organizations to steal sensitive data, critical and emerging key technologies, intellectual property, and personally identifiable information (PII).” Targets of particular interest include managed service providers, semiconductor companies, the Defense Industrial Base (DIB), universities, and medical institutions.7

These attacks demonstrate that large nation-states have accumulated massive attack capabilities. Had those capabilities been used to disrupt rather than to gather information, the possible losses from even one such attack are hard to imagine. In fact, we cannot rule out that the attackers planted “cyber bombs” that, if detonated, could cause physical destruction.8

The best we can hope for is the current plague of ransomware attacks. It creates a financial incentive to cause visible and painful damage to vulnerable systems. Ransomware rewards attacking systems individually, rather than in a coordinated simultaneous attack across critical systems. Unlike all-out cyberwar, ransomware gives us a chance to incrementally replace each vulnerable system from within a still working world. But this only makes us safer if victims, after paying off the attacker, actually replace their vulnerable systems with secure alternatives. This will not happen until secure alternatives are commercially available.

Build Secure Foundations

Before claiming that any system can be perfectly invulnerable, we need some careful distinctions. We can never achieve zero risk because we can never be certain of anything. That does not mean that we cannot build perfect systems; we can just never be certain we have done so. Instead, we accumulate evidence that increases our confidence that a given system is perfect. Many mathematical proofs are likely perfect. But when checking any one proof, we may make a mistake. Automated proof checking raises our confidence. But even then, the proof checking software may be buggy or deceitful, or we may simply be confused about what a valid proof actually means. Even after automated proof checking, we should seek other evidence to increase our confidence.

Even complex systems can be made amenable to formal proofs that they operate as intended.9 The seL4 operating system microkernel seems to be secure. It has an automated formal proof of end-to-end security. It has also withstood a red team attack (a full-scope, multilayered attack exercise) that no other software has withstood.10 Not only did seL4 survive it, the red team reports they made no progress towards finding exploitable flaws. The strengths and weaknesses of a red team attack are very different from those of a formal proof of security, so seL4 getting an A+ on both is strong evidence that it is actually correct.

Even a perfectly secure foundation is useful only if it provides a useful form of security. A perfect implementation of the wrong architectural principles would be an improvement on the status quo, but would still do us little good. Fortunately, seL4 implements the object-capability or ocap access control architecture—which is the best foundation we know for intelligent systems of voluntary cooperation.

Hardware Supply Chain Risks

Even if the seL4 software is perfectly secure, software runs on hardware. The seL4 proof—in fact virtually all of computer security—assumes the hardware as delivered from the factory is not maliciously corrupted. This assumption is necessary for the software to provide meaningful protection. But we cannot be certain in this assumption.

The proof that a given hardware design is secure only helps if the software runs on the hardware as designed. This assumption sounds trivial but may be false, since the hardware may include a manufactured-in trapdoor. The U.S. National Security Agency (NSA) has served national security letters to software companies forcing them to disclose user information. It is possible, indeed likely, that the NSA has already served similar national security letters to hardware companies, including Intel and AMD, requiring them to install trapdoors which the NSA can trigger. Whether or not there is a backdoor purposefully built into the Intel Management Engine, existing widely used hardware contains code, built into production systems by the manufacturers, whose existence is known but whose contents are not. Most research on hardware security (TPMs,11 HSMs,12 tamper-detecting shells, secure bootstrap, CHERI13) only addresses post-manufacture risks. It does nothing to mitigate the risks of backdoors built in from the beginning.

Fearing billion-dollar losses after the 2014 national security letter revelations, IBM’s Robert Weber sent an open letter stating that IBM would not comply with such national security letters.14 However, the severe penalties associated with disobedience or disclosure should make us skeptical. There are already demonstrations of how to build extremely hard-to-detect exploitable trapdoors at the analog level.15

Even if Weber’s pledge is honest and correct, no manufacturer can build hardware that is both competitive and credibly correct. Unfortunately, all known techniques for building credibly correct machines—such as randomized FPGA layout, public blockchains, proofs of correct execution—are vastly more expensive than merely building correct machines. Fortunately, such credibility is valuable enough for some activities, such as cryptocommerce, to pay these costs. We return to this theme below.

Nested Boundaries and Channels

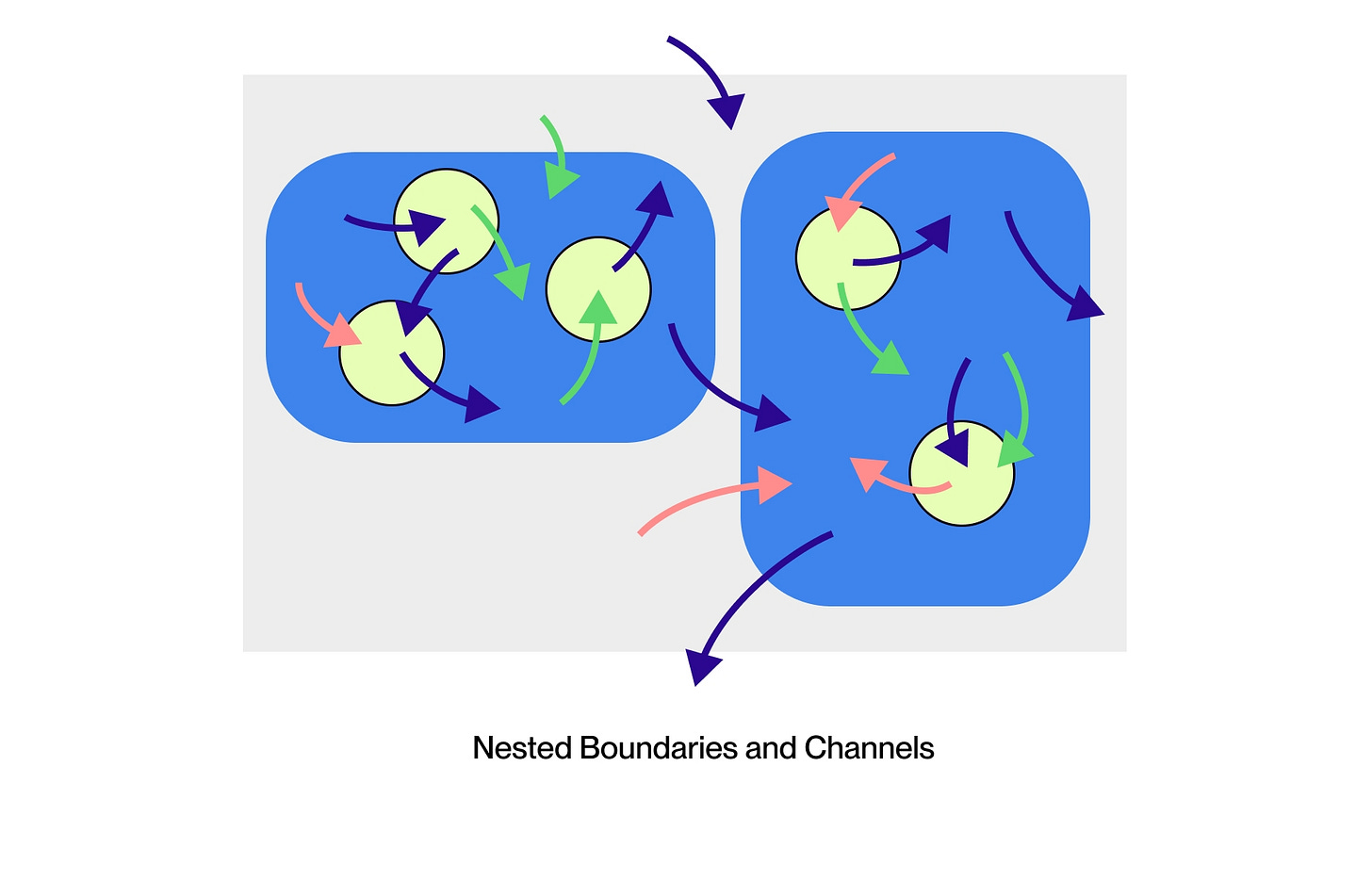

Herbert Simon and John Holland explain that complex adaptive systems—both natural and artificial—have an almost hierarchical nature.16 We are composed of systems at multiple nested granularities, such as organelles, cells, organs, organisms, and organizations. Similarly, we compose software systems at multiple nested granularities, such as functions, classes, modules, processes, machines, and services. At each nesting level, the subsystems are somewhat separated so they can independently evolve. But they also interact to jointly achieve larger purposes. The boundaries between them have built-in channels to selectively allow desired interaction while blocking destructive interference.

In both human markets and software systems, much of the traffic across these channels consists of requests. Boundaries prevent involuntary interactions. Requests enable cooperation. Both markets and software systems are largely networks of entities making requests of other entities, composing their specialized knowledge into systems of greater aggregate intelligence. Just as you may ask a package delivery service to deliver your father a package containing a birthday gift, a database query processor may ask an array to produce a sorted copy of itself. Every channel carries risks. The package delivery system may damage or lose the package. The array may sort incorrectly or throw an error. Some risks are a necessary consequence of delegating to a separate specialist. In economics, this is known as the principal-agent problem, where the requestor is the principal and the receiver is the agent. We expand on the principal-agent perspective in the next chapter.

If the channel is too wide, the request is unnecessarily risky, often massively so. This is the problem of access control. If you give the package delivery system keys to your house, so it can enter and pick up a package, it could pick up anything else. If you enable the sort algorithm to execute with all your account’s permissions, it might delete all your files while operating within the rules of your access control architecture. Computer science has two opposite access control approaches, with complementary strengths and weaknesses. Authorization-based access control is strong on proactive safety but weak on reactive damage control. Identity-based access control is weak on proactive safety but stronger on reactive damage control.

The pervasive insecurability of today’s entrenched software infrastructure is largely due to using identity-based access control for proactive safety. All mainstream systems today use identity-based access control lists or ACLs. In an ACL, each resource has an administered list of the account identities allowed to access it. In these systems, the sort algorithm runs as you. It has permission to delete all your files because you have permission to do so. In 2022, this is the norm.
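The identity-based model described above can be illustrated with a minimal Python sketch. All names here (`AclFileSystem`, `untrusted_sort`, the account `alice`) are hypothetical and chosen only for illustration; real operating systems implement ACLs quite differently. The sketch only aims to show that a check keyed on who is asking cannot distinguish a legitimate request from an abusive one made by code running under the same account.

```python
class AclFileSystem:
    """Toy file store where each file carries a list of allowed account names."""

    def __init__(self):
        self._files = {}   # name -> contents
        self._acl = {}     # name -> set of account identities allowed access

    def create(self, account, name, contents):
        self._files[name] = contents
        self._acl[name] = {account}

    def read(self, account, name):
        self._check(account, name)
        return self._files[name]

    def delete(self, account, name):
        self._check(account, name)
        del self._files[name]
        del self._acl[name]

    def names(self, account):
        return sorted(n for n, allowed in self._acl.items() if account in allowed)

    def _check(self, account, name):
        # The only question asked is "who are you?", never "what do you need?"
        if account not in self._acl.get(name, set()):
            raise PermissionError(f"{account} may not access {name}")


def untrusted_sort(fs, running_as, name):
    """A third-party sort routine (hypothetical) running with the caller's identity."""
    result = sorted(fs.read(running_as, name))
    # Nothing in the ACL model stops the routine from doing this as well:
    for other in fs.names(running_as):
        if other != name:
            fs.delete(running_as, other)
    return result


fs = AclFileSystem()
fs.create("alice", "numbers", [3, 1, 2])
fs.create("alice", "tax-records", ["2021", "2022"])

sorted_numbers = untrusted_sort(fs, "alice", "numbers")
remaining = fs.names("alice")
```

In this toy run the sort succeeds, yet `remaining` no longer contains `tax-records`: every access check passed, because each request was made as `alice`.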

To eliminate these unnecessary risks, authorization-based access control supports the Principle of Least Authority or POLA. A request receiver should be given just enough access rights to carry out this one request. When you ask the package delivery service to deliver a particular package, you hand them that package. This gives them just enough ability to carry out that request, at the price of only the necessary risks of damaging or losing that one package. This risk reduction supports proactive safety. Some remaining risks can be mitigated or managed by reactive damage control.

Most systems using authorization-based access control, including seL4, are object-capability or ocap systems. In ocap systems, permissions are delegatable bearer rights, where possession grants both the ability to exercise and to further delegate that permission. You give a clerk a package as part of a request, and she delegates it to the delivery agent. Likewise, these bearer rights are communicated in requests, both to express what the request is about (delivering this specific package) and to give the receiver enough authority to carry out this one request. In object languages, possession of a pointer permits use of the object it points to. Pointers are passed as arguments in requests sent to other objects. Each argument both adds meaning to the request and permits the receiver to use the pointed-to object, in order to carry out the request. OCaps start by recognizing the security properties inherent in this core element of normal programming.
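As a rough illustration of passing a reference as the permission, here is a minimal Python sketch. The names `make_read_only` and `untrusted_sort` are hypothetical. Note the loud caveat: Python does not enforce encapsulation (introspection can reach into closures), so this shows the shape of the pattern rather than a secure implementation; a real ocap language or runtime would be needed for the boundary to hold against an attacker.

```python
def make_read_only(items):
    """Return a facet that can read `items` but offers no way to change them."""
    def read():
        return tuple(items)   # hand out a copy, never the list itself
    return read


def untrusted_sort(read_numbers):
    """A third-party sort routine (hypothetical) that receives only what it was handed."""
    # No file system, account, or other file is in scope here: the single
    # argument is both the subject of the request and the permission to use it.
    return sorted(read_numbers())


files = {"numbers": [3, 1, 2], "tax-records": ["2021", "2022"]}

# Delegate exactly one attenuated right: read access to one file.
sorted_numbers = untrusted_sort(make_read_only(files["numbers"]))
```

The design choice is that authority travels with the argument. The sort routine is never told who the caller is, and it has nothing to name or reach beyond the one facet it was given.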

Reactive damage control is best for iterated games, where misbehavior leaves evidence, deterring future attempts to cooperate with the misbehaving entity. If a business loses your package, you stop doing business with them. If you can prove that to others, they may stop as well. In some ways, these problems are duals. For safety, we delegate least authority. For effective deterrence, we assign most responsibility. For example, proof of stake blockchains only detect validator misbehavior after it occurs, but their imposed penalty is severe enough to deter such misbehavior. HORTON (Higher-Order Responsibility Tracking of Objects in Networks) is an identity-based access control pattern for reactively containing damage, assigning responsibility, and deterring misbehavior in dynamic networks.17

Accidental vs Intentional Misbehavior, Who Cares?

When components are composed so they may cooperate, they may destructively interfere, whether accidentally or maliciously. We call accidental interference bugs. Concern with bugs has driven software engineering from its beginning. To better support cooperative composition, while minimizing bugs and their impact, we invented modularity and abstraction mechanisms.

Encapsulation is the protection of the internals of an abstraction from those it is composed with. These are the boundaries of software engineering. APIs are specialized languages of requests, for the clients of the abstraction to make abstract requests of an implementation of the abstraction. These are the channels. The API is across an abstraction boundary, where the requests are abstracted both from the multiplicity of reasons why a client might want to make the request and the multiplicity of ways in which the request can be carried out.

Human institutions are abstraction boundaries. The abstraction of “deliver this package” insulates the package delivery service from needing to know your motivation. It also insulates you from needing to know how their logistics work. You can reuse the “package delivery service” concept across different providers, and they can reuse it across different customers. In both cases, we all know the API-like ritual to follow, even when dealing with new counterparties.

The modularity and abstraction mechanisms of software engineering have enabled deep cooperative composition while minimizing the hazards of accidental interference. The richness of modern software is in large measure due to this success.

We call intentional interference attacks. Unlike much of the rest of computer security, the ocap approach does not see bugs and attacks as separate problems. OCaps provide modularity and abstraction mechanisms effective against interference, whether accidental or intentional. The ocap approach is consistent with much of the best software engineering. Indeed, the ocap approach to encapsulation and to request making—to boundaries and channels—is found in cleaned-up forms of mostly functional programming18, object-oriented programming19, actor programming20, and concurrent constraint programming.21 The programming practices needed to defend against attacks are “merely” an extreme form of the modularity and abstraction practices that these communities already encourage. Protection against attacks also protects against accidents.

Accommodate Legacy

Our society rests on an entrenched software infrastructure representing a multi-trillion dollar ecosystem. But it is all built on the wrong security premises, and so, ideally, should be replaced. However, even if our continued existence depends on replacing it, that will not happen. We may go extinct first. Fortunately, that’s not the only way to cope with this legacy of insecurable software. There are many effective ways to mix new secure systems with legacy ones, each of which may ease this transition. Within secure architectures, we can create confined but faithful emulations of the insecure worlds in which this legacy software runs.

Running legacy software in confinement boxes lets us continue using it, without endangering everything else. Capsicum embeds an ocap system within a restricted form of the Unix operating system.22 The seL4 rehosting of Linux lets legacy Linux software run in seL4 confinement boxes. CHERI adds hardware ocap support to existing CPU architectures, and is shipping in recent ARM chips.23

JavaScript is widely used and widely recognized as insecure. Recognizing JavaScript’s growing importance, in 2007 Doug Crockford convinced author Mark Miller to join him on the JavaScript standards committee. Their intent was to help shape JavaScript into a language that smoothly supports ocap-style secure programming, an effort Miller has continued ever since.24 It turns out that SES, a secure ocap system, can make the language secure while still running much of the existing JavaScript code. Experience at Google, Salesforce, MetaMask, Node, Agoric, and others confirms that much existing JavaScript code runs under SES compatibly. MetaMask leverages this to create a framework for least authority linkage of packages, substantially reducing software supply chain risks.

Reduce Risks of Cooperating, Recursively

Secure foundations are necessary, but are far from sufficient. Our software comes in many abstraction levels, and solves an open-ended set of new problems, each of which can introduce vulnerabilities. We cannot hope for perfect safety in general, even as an ideal to approach. Cooperation inherently carries risks. However, we can approach building cooperative architectures in ways that systematically reduce these risks.

The nesting of boundaries and channels is key to tremendously lowering our aggregate risk. By practicing these principles simultaneously at multiple scales, we gain a multiplicative reduction in our overall risk. To explain this, let’s visualize an approximation of overall expected risk as an attack surface. None of the following visualizations are even remotely to scale, even as approximations. Instead, they are purely to illustrate a qualitative argument about quantities we have no idea how to quantify.

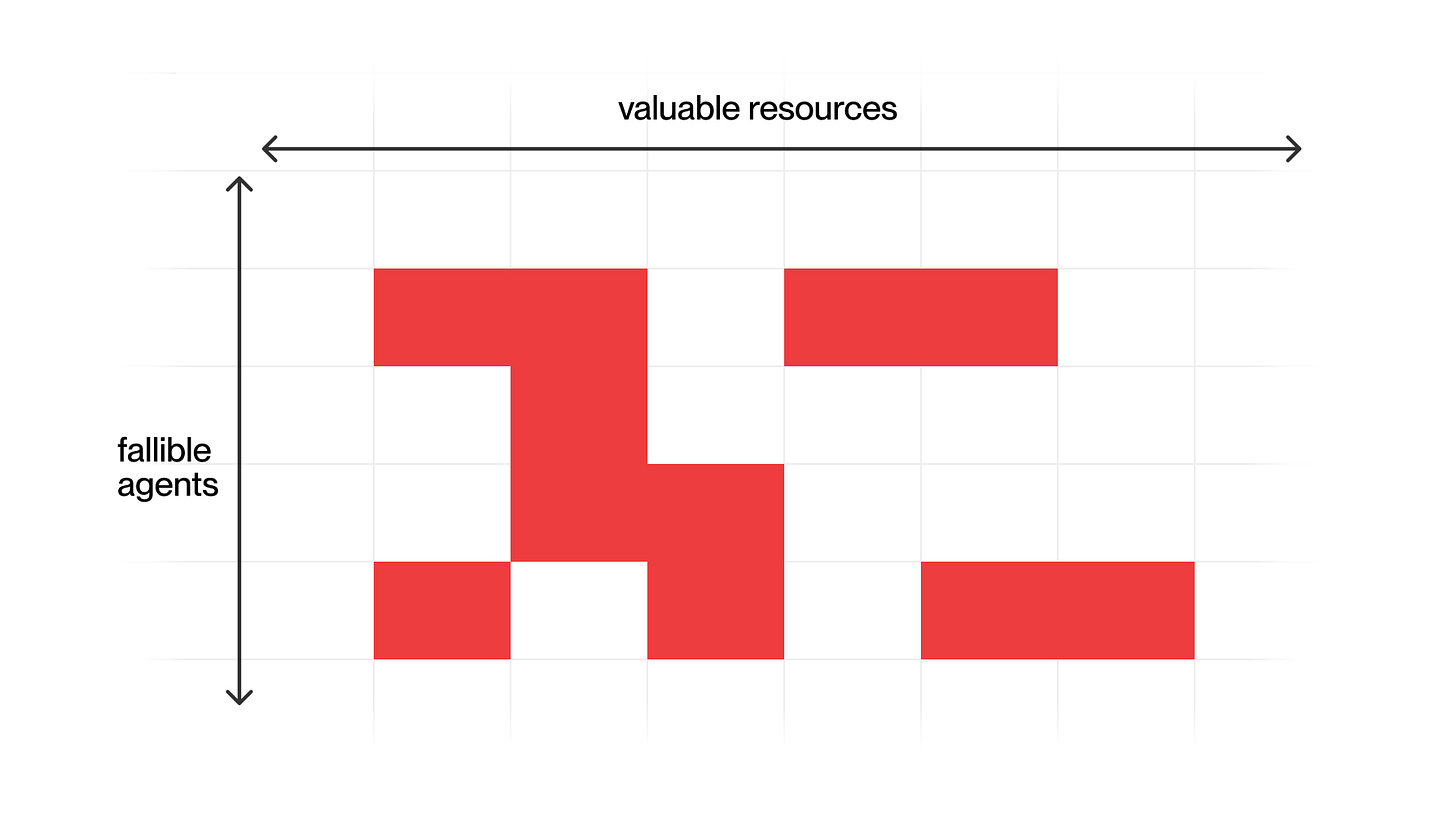

To visualize our aggregate risk, let’s start by observing that expected risk is just the flip side of expected value, where the values are negative. Anticipating automated attacks, we should assume any exploitable vulnerability is eventually exploited. So for each possible vulnerability, our expected risk is the probability that it is actually exploitable times the damage that could be done by exploiting it. To approximate these, we start with proxy measures.

For the possibility of an exploitable vulnerability, we substitute a fallible agent. This agent may be malicious, or may simply have a flaw letting an attacker subvert it. For the damage that could be done by exploiting a vulnerability, we substitute the valuable resources that could be damaged by that fallible agent. The red squares are where a given agent has access to a given valuable resource.

With the agents as rows, the resources as columns, and “has access” approximating “could damage”, this is the classic access matrix for analyzing access control systems. The total red surface area is our total aggregate expected risk, or attack surface. To become safer, we need to reduce the red surface area. To support safer cooperation, we need to remove red with as little loss of functionality as possible.

At any one scale, we can remove red by a variety of techniques. The principle of least authority removes red horizontally—it gives each fallible agent only what it needs for its legitimate duties. Placing legacy in confinement boxes lets one give that box as a whole only the external world access it needs for its confined software, again reducing red horizontally. Code reviews, testing, and especially formal verification shrink the height of each fallible agent row, i.e., its likelihood of misbehaving, thereby reducing red vertically. However, limited to one scale, even all these techniques together still leave too much red.
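The access-matrix picture can be sketched in a few lines of Python. Everything numeric here is an assumption made up so the arithmetic is easy to follow: the agents, the resources, the failure probabilities, and the resource values are hypothetical, and, as noted above, these are quantities no one knows how to measure. The sketch only shows how removing cells from a row and thinning a row each lower the total.

```python
def attack_surface(failure_prob, resource_value, access):
    """Sum, over every (agent, resource) cell with access, of
    P(agent misbehaves) * value of the resource it could damage."""
    return sum(failure_prob[agent] * resource_value[resource]
               for agent, resource in access)


# Hypothetical numbers, for illustration only.
resource_value = {"files": 100.0, "network": 50.0, "keys": 200.0}
failure_prob = {"sorter": 0.10, "mailer": 0.10}

# Status quo: every agent runs with the user's full authority.
ambient = {(a, r) for a in failure_prob for r in resource_value}

# Least authority: each agent keeps only what its job requires
# (fewer red cells in each row).
least = {("sorter", "files"), ("mailer", "network")}

# Review, testing, or verification lowers an agent's chance of
# misbehaving (a thinner row).
verified_prob = dict(failure_prob, sorter=0.01)

risk_ambient = attack_surface(failure_prob, resource_value, ambient)
risk_least = attack_surface(failure_prob, resource_value, least)
risk_least_verified = attack_surface(verified_prob, resource_value, least)
```

With these made-up inputs the three totals come to roughly 70, 15, and 6, which illustrates the direction of the effect but says nothing about its real-world size.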

The Sierpinski carpet, the two-dimensional analogue of the fractal Menger Sponge, helps us visualize the benefits of reducing red simultaneously at multiple scales. At any one scale, only 1/9 of the red was removed, leaving 8/9. However, as we continue removing red at finer scales, the remaining surface is 8/9 times 8/9 times 8/9 etc., asymptotically approaching zero total surface area. This suggests that recursive application of our techniques could approach zero aggregate risk. Alas, even our best case is not that good.
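The geometric decay in this idealized picture can be written out explicitly. Assuming, as in the visualization, that each refinement step removes one ninth of every remaining red square, the fraction of the original red area left after $n$ steps (the symbol $A_n$ is introduced here for illustration) is

$$
A_n = \left(\frac{8}{9}\right)^{n}, \qquad \lim_{n \to \infty} A_n = 0 .
$$

For example, $A_1 = 8/9 \approx 0.889$, $A_5 \approx 0.555$, and $A_{20} \approx 0.095$, so the decay is steady but not fast: each additional scale removes only a further ninth of what remains.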

Applying this visualization to a case study of an historic ocap system25—the DarpaBrowser running on CapDesk written in the E ocap language—we get the following picture. At each level, there are some solid red boxes whose internal risk we cannot reduce at finer scales. The main solid red boxes are the confinement boxes containing legacy software. These legacy boxes appear at each scale. We can prevent confined legacy software from doing much damage to the world outside itself. But because the legacy software internally operates by its own principles, we cannot keep it from fouling its own nest. Wherever it appears, within that box the recursion stops.

Even with these limitations, applying our techniques recursively reduces the density of the red, tremendously reducing our aggregate risk.

Overcome Adoption Barriers

An often neglected security vulnerability is the human. Phishing can be used to get ocaps as well as passwords. People with legitimate permissions can be tricked into misusing them. Unless there is widespread adoption of secure systems, their theoretical possibility is not much use. The adoption barrier to making the world a safer place is ignored in most abstract discussions of advanced technology attack, perhaps because one imagines that once humanity is urgently faced with these dangers, society will do what needs to be done. If there are known technological solutions for the dangers, it is natural to assume those most concerned can get a majority to build, adopt, and deploy these solutions fast enough to avert disaster.

As for massive cyber attacks, one would hope that government and industry would invest in rebuilding infrastructure on more securable, user-friendly foundations. However, after seeing how weakly the world reacted to cyber attacks that revealed massive vulnerabilities, this appears to be wishful thinking. The more likely reaction to the panic following a major breach will be to direct even more effort into entrenched techniques that do not and cannot work, because those are recognized best practices. Techniques that actually could be adequate, if the computational world were reconstructed from the ground up on top of them, will be seen as experimental, outside of established best practice, and prohibitively expensive. Instead, we will likely procrastinate with insecure affordable patches until disaster hits.

The adoption of secure computing is being delayed because the overall software ecosystem is not “hostile enough.” Companies and institutions can be too successful when they build otherwise high quality systems implemented in insecurable architectures. Small projects can free-ride on larger projects being more attractive targets. In a world where large-scale, entrenched software projects primarily get attacked, most risk to early stage software projects is due to non-security dangers.

Therefore, for most early projects, investing in costly security is less important than other areas, such as rapid product prototyping and receiving user feedback. Additionally, when hiring employees, a small company considers the person’s additional value to the project. So with regard to security, companies generally minimize the education burden on their team by following what are considered current best practices, rather than more unusual (and more secure) techniques.

If a small project engages in the same allegedly best practices as bigger projects, it can escape attack because there are bigger targets. By the time the small project becomes large, and a serious target, it has enough capital to manage the security problem without truly fixing it. As of 2022, all large corporations manage their pervasive insecurities rather than fixing them. This is only sustainable because attacks are not extremely sophisticated yet.

A sophisticated attack would make the world hostile enough to end fragile systems, but would also severely disrupt society. On the positive side, when this higher level of attack gets deployed, the world’s software ecosystem will become hostile enough that smaller projects’ relative safety through obscurity will end, because insecurable systems of all sizes will be punished early on. The downside is the danger of widespread destruction of the existing software infrastructure. If the destruction passes a certain threshold, it could be difficult to transition to a safer situation without a serious downturn in the overall functionality of the world’s computation systems, not to mention its economy.

The problem is that a multi-trillion dollar ecosystem is already built on the current insecurable foundations, and it is very difficult to get adoption for rebuilding it from scratch. Thus, we should explore strategies to bridge from current systems to new secure ones. As mentioned in Chapter 5, in other contexts this is known as genetic takeover, a term derived from biology. In a genetic takeover, the new system is grown within the existing system, and is competitive within it. Once the new system becomes widespread enough, we can shift over to the new system, and eventually make the previous system obsolete.

A real-world analogy is how society has adapted to earthquake risk. Instead of requiring immediate demolition and reconstruction of an installed base of existing, unsafe buildings, rewritten building codes require earthquake reinforcement be gradually done as other renovations take place. Over time, the installed base becomes much safer.

Genetic takeover in the computer industry has happened before. The entire ecosystem of mainframe software rested on a few mainframe platforms, which seemed to be permanently entrenched. The new personal computing ecosystem initially grew alongside it, complementing rather than competing with the old one, but eventually mostly displaced it. Attempting to replace today’s entrenched software ecosystem is not hopeless, but it is very difficult.

Grow Secure Systems in Hostile Environments

A hopeful counterexample to the insecurable computer ecosystem is the blockchain ecosystem. The large monetary incentives to hack blockchains invite effective red-team attacks, which successful systems must survive. Chris Allen summarizes this accurately: “Bitcoin has a $100 billion bug bounty.” [26] When insecurity leads to losses, the players have no other recourse for compensation. Systems that are not bulletproof will be killed early and visibly, and therefore only bulletproof systems will populate these ecosystems.

Bitcoin and Ethereum are evolving with a degree of adversarial testing that can create the seeds for a system that can survive a magnitude of cyberattack that would destroy conventional software.

An analogy for the idea that bad bits can be fought with better bits can be taken from John Stuart Mill’s account of how ideas are discovered. He did not deny that bad ideas can cause harm, but he observed that we have no divine knowledge of which ideas are good and which are bad. The only way we discover better ideas over time is by being willing to listen to bad ones. The only robust protection from the damage bad ideas can cause is better ideas, which immunize us by letting us understand why the bad ideas are wrong. Competition between ideas in open argument discovers the truth better over time. Likewise, with information attacks, the flaw is not in the malware or the virus, but in the software’s vulnerability at the endpoint. Bad bits only damage you because you’re running insecure software. [27]

In this sense, even in communication at a much lower level than ideas, messages can transmit a virus or do other harm. The answer to this harm is for the message receiver to adopt an informational architecture that is not vulnerable. In short, instead of trying to prevent damaging bits from being sent, the best answer is better bits on the receiving side: secure operating systems, secure programming languages, and secure software all the way up.

Secure software systems like seL4 are better information. The more bad information or malware there is, the greater the demand for a secure endpoint. Computation built on the ocap principles of voluntary interaction, and on the alternatives discussed in this chapter, can yield a largely virus- and malware-safe system. There will always be new vulnerabilities and new attacks at upper abstraction levels. But the same iteration applies: these are best addressed by information receivers improving so as not to be harmed by the information. Building on these security principles may result in a general-purpose ecosystem that can be used if the existing dominant ecosystem is destroyed. If a secure system grows enough before the world is subject to major and frequent cyberattacks, then we might achieve a successful genetic takeover. We can potentially leave civilization a new architecture to migrate to, especially if migration starts before the destruction, or if the destruction is gradual rather than sudden.
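To make the ocap idea concrete, here is a minimal sketch in TypeScript of a receiver that can only use what it is explicitly handed. It is an illustration under stated assumptions, not a description of seL4 or SES: the names Log, makeLog, readOnly, makeRevocable, and untrustedPlugin are all hypothetical. It shows two patterns commonly associated with capability systems: attenuation, where a weaker capability is derived from a stronger one, and a revocable forwarder, sometimes called a caretaker.

```typescript
// Hypothetical illustration; none of these names come from the chapter.

interface Log {
  append(entry: string): void;
  read(): readonly string[];
}

type ReadOnlyLog = Pick<Log, "read">;

// The entries array lives only inside this closure. The returned object is
// the sole way to reach it, so holding the object is holding the authority.
function makeLog(): Log {
  const entries: string[] = [];
  return Object.freeze({
    append(entry: string): void {
      entries.push(entry);
    },
    read(): readonly string[] {
      return Object.freeze([...entries]); // hand out a frozen copy
    },
  });
}

// Attenuation: derive a weaker capability that can read but never append.
// The facet simply has no append method to call.
function readOnly(log: Log): ReadOnlyLog {
  return Object.freeze({ read: () => log.read() });
}

// Revocable forwarder: the grantor keeps revoke() and can cut access later.
function makeRevocable(log: ReadOnlyLog): { facet: ReadOnlyLog; revoke(): void } {
  let target: ReadOnlyLog | null = log;
  const facet: ReadOnlyLog = Object.freeze({
    read(): readonly string[] {
      if (target === null) throw new Error("capability revoked");
      return target.read();
    },
  });
  return { facet, revoke: () => { target = null; } };
}

// Stand-in for untrusted code: it receives only the facet it is given.
function untrustedPlugin(view: ReadOnlyLog): number {
  return view.read().length;
}

const log = makeLog();
log.append("boot");
log.append("login");
const { facet, revoke } = makeRevocable(readOnly(log));
const seen = untrustedPlugin(facet); // 2
revoke(); // any later facet.read() throws
```

The protection here comes from what the plugin can reach, not from inspecting what it tries to do. In ordinary JavaScript this is only part of the story, since shared global objects can still be tampered with unless the environment is hardened as well.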

Chapter Summary

Physical systems rely on walls, barriers, and the rule of law to enable voluntary interactions, but digital systems have the advantage of an innate voluntarism. Ignoring the coupling with the physical world, one can only send bits, not violence, through the network, creating a fundamentally voluntary starting point for building architectures. Nevertheless, in the absence of computer security, the sent bits can still be catastrophically damaging. [28] Voluntarism by itself doesn’t mean risk-free cooperation. Computer security experts still put their machines on the internet, calculating that the benefits of cooperating there are worth the risks. Ramping up computer security can reduce those risks and create a much more cooperative world.

Sound interesting? Watch Gernot Heiser’s seminar on applications of cybersecurity.

Next chapter: WELCOME NEW PLAYERS | Artificial Intelligences

The Precipice by Toby Ord.

Business Blackout: The Insurance Implications of a Cyberattack on the US Electric Power Grid by Lloyd’s & Cambridge Centre for Risk Studies.

In Blackout, Marc Elsberg gives a terrifying fictional, yet illustrative, depiction of the collateral effects and potential for violence that an extended power outage may bring.

Advanced Persistent Threat Compromise of Government, Critical Infrastructure, and Private Sector by the U.S. Cybersecurity and Infrastructure Security Agency.

Chinese State-sponsored Cyber Operations by the U.S. Cybersecurity and Infrastructure Security Agency.

In Fragile World Hypothesis, David Manheim makes the compelling case that, even without any proactive cyber war, the gradual deterioration of the insecurable computer infrastructure on which contained dangers such as nuclear weapons rely could be sufficient to cause significant damage.

Malicious Uses of AI by the Future of Humanity Institute.

Using Formal Methods to Enable More Secure Vehicles: DARPA’s HACMS Program by Kathleen Fisher.

Trusted Platform Modules.

Hardware Security Modules.

Capability Hardware Enhanced RISC Instructions by Robert Watson, Simon Moore, Peter Sewell, Peter Neumann.

A Letter to Our Clients About Government Access to Data by Robert Weber. IBM: We Haven’t Given Any Client Data by Sam Gustin.

A2: Analog Malicious Hardware by Kaiyuan Yang, Matthew Hicks, Qing Dong, Todd Austin, and Dennis Sylvester.

Architecture of Complexity by Herbert Simon. Signals and Boundaries by John Holland.

Delegating Responsibility in Digital Systems by Mark Miller, Alan Karp, Jed Donnelley. Architectures of Robust Openness by Mark Miller.

Protection in Programming Languages by James Morris. A Security Kernel Based on the Lambda-Calculus by Jonathan Rees. How Emily Tamed the Caml by Marc Stiegler and Mark Miller.

Language and Framework Support for Reviewably-Secure Software Systems by Adrian Mettler. A Capability-Based Module System for Authority Control by Darya Melicher, Yang Qing Wei Shi, Alex Potanin, and Jonathan Aldrich.

Deny Capabilities for Safe, Fast Actors by Sylvan Clebsch et al.

The Oz-E Project: Design Guidelines for a Secure Multiparadigm Programming Language by Fred Spiessens and Peter Van Roy. Actors as a Special Case of Concurrent Constraint Programming by Ken Kahn and Vijay Saraswat.

Capsicum: Practical Capabilities for UNIX by Robert Watson.

CHERI: A Hybrid Capability-System Architecture for Scalable Software Compartmentalization by Robert Watson et al.

JavaScript: The First 20 Years by Allen Wirfs-Brock. Automated Analysis of Security-Critical JavaScript APIs by Ankur Taly et al. Distributed Electronic Rights in JavaScript by Mark Miller, Tom Van Cutsem, Bill Tulloh. Many elements of modern JavaScript (promises, strict mode, freezing, proxies, weakmaps, classes) are due to this effort. These elements support SES, a secure ocap system that embeds smoothly in standard JavaScript. The SES shim is a library that builds a SES system within any standard JavaScript. SES itself is a proposal advancing through TC39, and is the basis for TC53’s JavaScript standard for embedded devices. Moddable’s XS is a specialized JavaScript engine built to run TC53-compliant SES on devices. Agoric runs SES as a secure distributed persistent language (Endo on SES). This distributed system includes the Agoric blockchain, using SES on XS as a smart contract language.

Towards Secure Computing by Mark Miller. Security Review of the Combex DarpaBrowser Architecture by Dean Tribble, David Wagner. DarpaBrowser Report by Marc Stiegler, Mark Miller. The Structure of Authority by Mark Miller, Bill Tulloh, Jonathan Shapiro.

In personal communications.

Computer Security as the Future of Law by Mark Miller.

How to deal with bits that are damaging on a social, economic, or psychological level in our idea-space is discussed in chapter 3.